Handcrafting Non-uniform Grid Refinement for Modern GPUs

At the heart of many natural phenomena and engineering challenges lies the intricate dance of fluids. From the way air wraps around a flying vehicle to the turbulent paths of ocean currents, understanding fluid dynamics is crucial. However, one of the perennial challenges in the numerical simulation of such flows is the vast range of length scales involved. Capturing both the broad strokes of the flow and the minute eddies that arise from multiscale geometric features and the inherent dynamics of turbulence is a formidable task. Consider the numerical simulation of airflow around a passenger aircraft. First, the aircraft must be enclosed in a finite but large computational domain that approximates open-air flight conditions. Additionally, geometric features as thin as the winglets must be resolved. Meeting both of these requirements by tiling the entire computational domain uniformly quickly overwhelms compute and storage resources, often rendering the problem impracticable.

This is where non-uniform grid refinement methods come into play, offering a way to adjust the level of detail across different regions of the simulated flow. By focusing computational resources on areas of interest while keeping a broader view elsewhere, these techniques enable large-scale simulation of flows in open environments that are otherwise computationally infeasible.

The various levels of detail that need to be captured in a virtual wind tunnel, from a large domain containing the vehicle (top) to small geometric features impacting the quality of the resulting flow (bottom).

A GPU-tailored Grid Refinement Algorithm

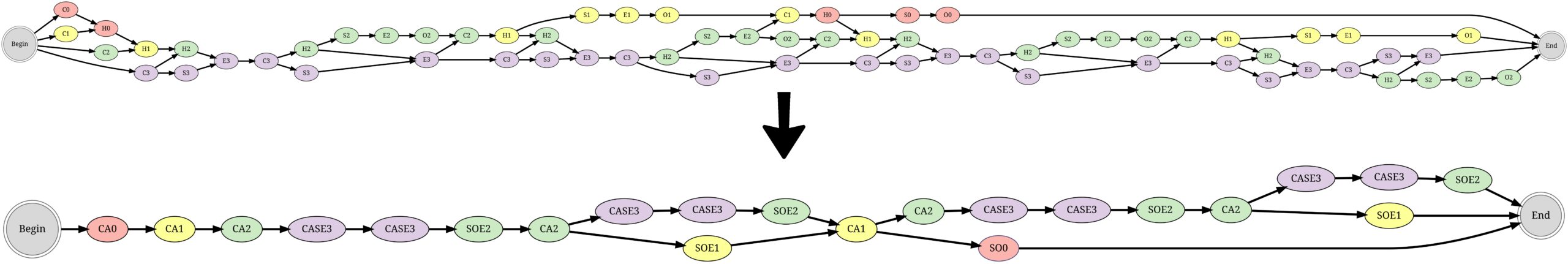

In a recent article accepted at the 38th IEEE International Parallel & Distributed Processing Symposium, Autodesk Research introduced a new methodology that carefully orchestrates the parallel workload of a grid refinement algorithm for the GPU architecture. This work is based on a class of computational fluid dynamics methods known as the Lattice Boltzmann Method (LBM). Our improvements represent a significant step forward in the efficiency and performance of fluid dynamics simulations. By tailoring the grid refinement process to better leverage the parallel processing capabilities of the GPU, we managed to reduce computation times and increase the fidelity of simulations. This optimization process involved rethinking the computational algorithms and carefully considering the GPU’s memory management and processing capabilities.

Among these optimizations, we leverage kernel fusion: this combines multiple kernels/computations into one, reducing the time spent on data transfers and synchronization between different stages of the calculation while improving the overall computational efficiency.

Implementing kernel fusion required a deep dive into both the underlying LBM algorithm for solving the fluid flow and the architecture of modern GPUs. We meticulously analyzed the data dependencies between different computational steps, identifying opportunities for fusion. This analysis led to a reimagining of the LBM algorithm in which different steps could be efficiently combined. As a result, our team defined a faster multi-resolution LBM method without sacrificing the method’s accuracy or stability.
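To make the idea of fusion concrete, here is a minimal sketch in plain Python. It is not the multi-resolution algorithm from our paper. It assumes a toy one-dimensional, three-velocity (D1Q3) lattice with periodic boundaries and a hypothetical relaxation time `TAU`, and the function names are chosen purely for illustration. The two-pass version writes every post-collision value to an intermediate array and then reads it back to stream. The fused version collides a cell and immediately pushes the result to its neighbors, so the intermediate array never exists. On a GPU, that intermediate array would live in global memory, which is the traffic fusion aims to avoid.

```python
# Toy D1Q3 lattice: rest, right-moving, and left-moving populations.
C = (0, 1, -1)                          # lattice velocities
W = (2.0 / 3.0, 1.0 / 6.0, 1.0 / 6.0)   # lattice weights
TAU = 0.8                               # hypothetical BGK relaxation time


def collide_cell(f_cell):
    """BGK collision for one cell: relax populations toward equilibrium."""
    rho = sum(f_cell)
    u = sum(c * f for c, f in zip(C, f_cell)) / rho
    post = []
    for c, w, f in zip(C, W, f_cell):
        feq = w * rho * (1.0 + 3.0 * c * u + 4.5 * (c * u) ** 2 - 1.5 * u * u)
        post.append(f - (f - feq) / TAU)
    return post


def step_two_pass(f):
    """Unfused: a collision pass, then a separate streaming pass."""
    n = len(f)
    post = [collide_cell(cell) for cell in f]   # full intermediate array
    new = [[0.0] * 3 for _ in range(n)]
    for x in range(n):
        for i, c in enumerate(C):
            new[(x + c) % n][i] = post[x][i]    # periodic streaming
    return new


def step_fused(f):
    """Fused: collide each cell and push the result to its neighbors at once."""
    n = len(f)
    new = [[0.0] * 3 for _ in range(n)]
    for x in range(n):
        post = collide_cell(f[x])               # stays local to this iteration
        for i, c in enumerate(C):
            new[(x + c) % n][i] = post[i]
    return new


# Fluid at rest with a small density bump in the middle of the domain.
densities = [1.0] * 16
densities[8] = 1.1
f0 = [[w * rho for w in W] for rho in densities]

same_result = step_two_pass(f0) == step_fused(f0)
```

Both functions perform the same arithmetic in the same order, so `same_result` is `True`. The difference is only in how much intermediate data is written and re-read, which is where the fused variant saves time on bandwidth-bound hardware.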

More Details in Less Time

By enhancing the efficiency of the non-uniform grid refinement algorithm on the GPU, we have achieved remarkably detailed LBM simulations in less time. These improvements are particularly consequential in complex scenarios, such as airflow in urban environments or aerodynamic analysis of a vehicle inside a wind tunnel, where the ability to capture fine-scale features (e.g., flow around balconies or near the car surface) can significantly affect the accuracy of the simulation. Our results demonstrate both an increase in speed and an improvement in the quality of the simulations, giving a more nuanced understanding of fluid dynamics that supports better-informed designs.

As an example, on a single Nvidia A100 GPU with 80 GB memory, our algorithm allows simulations of unprecedented domain size with 1596×840×840 cells (measured at the finest level) with three levels of refinement. Additionally, compared to the state-of-the-art implementations of LBM-based techniques either on the CPU or GPU, our optimized code is at least an order of magnitude faster.
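A rough back-of-the-envelope calculation shows why a domain of this size depends on refinement. The sketch below estimates the memory a fully uniform grid at the finest resolution would need. The lattice model (19 populations per cell, as in D3Q19), single-precision storage, and two copies of the populations are illustrative assumptions, not figures taken from the paper.

```python
# Domain size at the finest level, as quoted above.
NX, NY, NZ = 1596, 840, 840

# Illustrative assumptions (not taken from the paper):
Q = 19               # populations per cell, as in a D3Q19 lattice
BYTES_PER_VALUE = 4  # single precision
COPIES = 2           # separate read and write buffers

A100_MEMORY_GB = 80  # nominal capacity of the GPU mentioned above

cells = NX * NY * NZ
uniform_bytes = cells * Q * BYTES_PER_VALUE * COPIES
uniform_gb = uniform_bytes / 1e9

print(f"cells at finest resolution: {cells:,}")
print(f"uniform-grid estimate:      {uniform_gb:.1f} GB")
print(f"fits in {A100_MEMORY_GB} GB: {uniform_gb <= A100_MEMORY_GB}")
```

Under these assumptions, a uniform grid would need roughly 171 GB for the populations alone, about twice the capacity of the card. Restricting the finest cells to the regions that need them is what brings the footprint within reach of a single GPU.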

Future Perspectives

These improvements open new possibilities for fluid dynamics simulations. By making these simulations more accessible and efficient, Autodesk Research is paving the way for a broader range of applications, from environmental modeling to the design of energy-efficient vehicles and buildings. The ability to quickly and accurately simulate complex fluid flows has the potential to accelerate innovation across multiple fields and offer insights that can lead to more sustainable, effective solutions to pressing challenges. These include designing buildings with improved energy efficiency (through natural ventilation and cooling) and more aerodynamically efficient cars and wind turbines.

To further boost the performance and scalability of our optimized LBM grid refinement solution, we plan to target multi-GPU systems by leveraging the capabilities of project Neon (https://github.com/Autodesk/Neon). Moreover, in collaboration with multiple groups at Nvidia, we are leveraging Nvidia Warp to provide an easy-to-use Python interface that will be incorporated into XLB (https://github.com/Autodesk/XLB), a Python library for accelerated CFD analysis. Both Neon and XLB are open-source projects through which Autodesk Research engages with the research community and industrial partners on challenging and exciting projects around HPC and CFD.

For further details on our optimized LBM grid solution and its integration with Neon and XLB, please check out our paper.

Get in touch

Have we piqued your interest? Get in touch if you’d like to learn more about Autodesk Research, our projects, people, and potential collaboration opportunities.
