Recently Published by Autodesk Researchers

Autodesk Research teams regularly contribute to peer-reviewed scientific journals and present at conferences around the world. Check out some recent publications from Autodesk researchers.

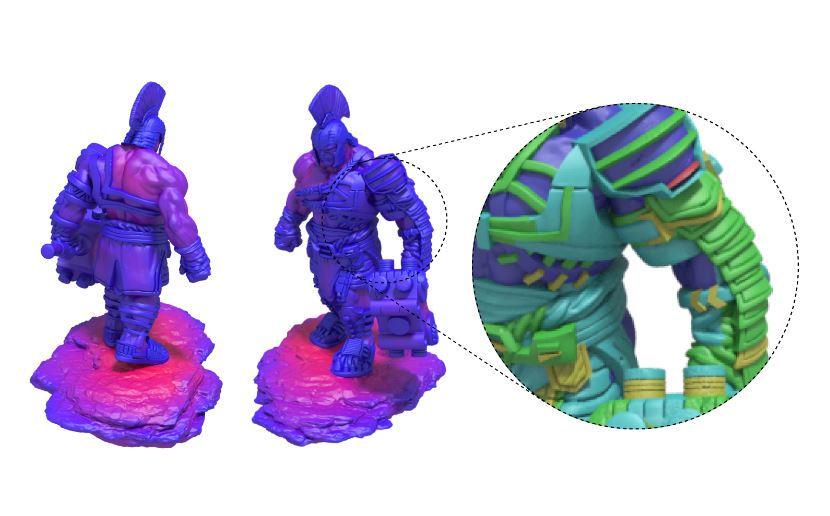

Neural Shape Diameter Function for Efficient Mesh Segmentation

Partitioning a polygonal mesh into meaningful parts can be challenging. Over the last decade, several methods have been proposed to tackle this problem, but at the cost of long computation times. More recently, machine learning has proven effective for segmenting 3D structures. This paper presents a data-driven approach that uses deep learning to encode a mapping function, based on the Shape Diameter Function, which is computed prior to mesh segmentation and can serve multiple applications.

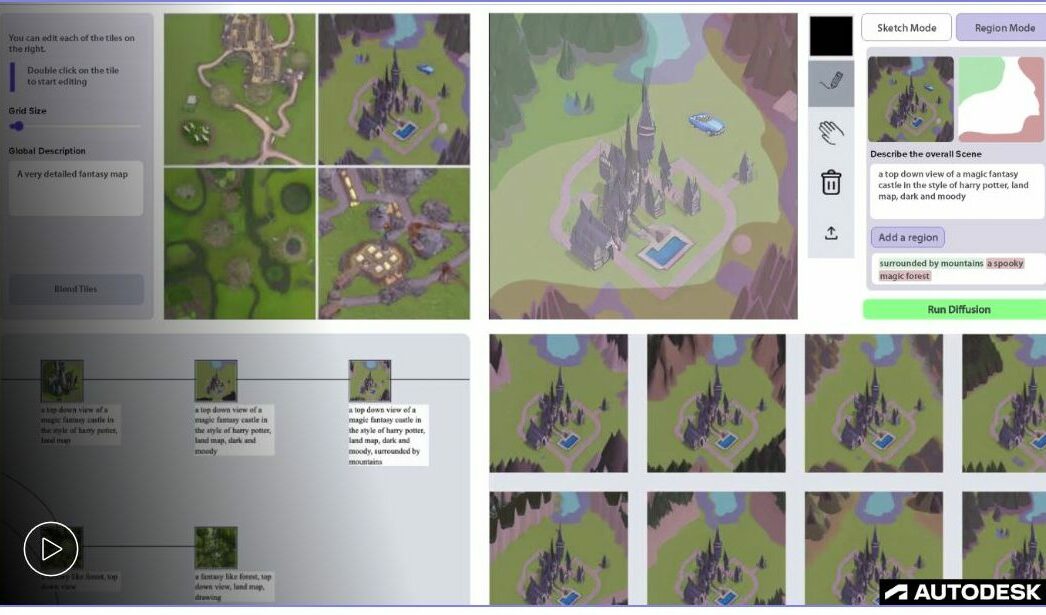

WorldSmith: Iterative and Expressive Prompting for World Building with a Generative AI

Crafting a rich and unique environment is crucial for fictional world building, but it can be difficult because illustrating a world from scratch takes time and significant skill. In this paper, the team investigates how recent multi-modal image generation systems can let users iteratively visualize and modify elements of their fictional world through a combination of text input, sketching, and region-based filling. WorldSmith enables novice world builders to quickly visualize a fictional world with layered edits and hierarchical compositions.

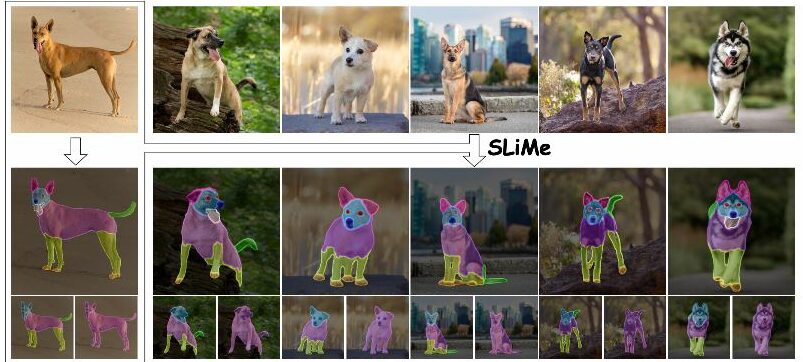

SLiMe: Segment Like Me

Large vision-language models such as Stable Diffusion (SD) have driven significant progress on a variety of downstream tasks, including image editing, image correspondence, and 3D shape generation. Inspired by these advances, the team proposes SLiMe, which leverages these large vision-language models to segment images at any desired granularity using as few as one annotated sample. SLiMe frames segmentation as an optimization task and, when additional annotated samples are available, can use them to further improve performance.

Get in touch

Have we piqued your interest? Get in touch if you’d like to learn more about Autodesk Research, our projects, our people, and potential collaboration opportunities.

Contact us