Safe Self-Supervised Learning of Insertion Skills in the Real World using Tactile and Visual Sensing

Industrial insertion is a difficult task for robots to perform. As an example, think about inserting a USB plug – the tolerances are tight, the USB socket is often not visible, and even a slight misalignment could lead to failure. Tasks like these are easy for people as we rely on the sense of touch in our fingers and do well even without being able to see the USB socket. We can tell how the USB plug is held in our fingers and modify the insertion motion accordingly. Robots must learn how to plug a connector in, but learning with robots is inherently unsafe – a person needs to babysit the robot to make sure it’s not about to try something that will break the USB plug or socket in the process. So we asked the question: how can we get a robot to learn complex tasks like USB insertion safely while being able to adjust to small variations in how it’s holding the part or where the insertion socket is?

In our recent research paper [1] to be presented by our summer 2022 intern (Letian Max Fu) at the 2023 IEEE International Conference on Robotics and Automation (ICRA), we (Hui Li and Sachin Chitta from the Robotics Lab at Autodesk Research) collaborated with Ken Goldberg’s lab at UC Berkeley to build a system capable of achieving tasks like USB insertion. We start with a self-supervised data collection pipeline where the robot generates and labels its own data. It tries to do this safely by minimizing collision between the part and its surroundings using force-torque sensing at the robot wrist. Figure 1 shows the overview of our approach.

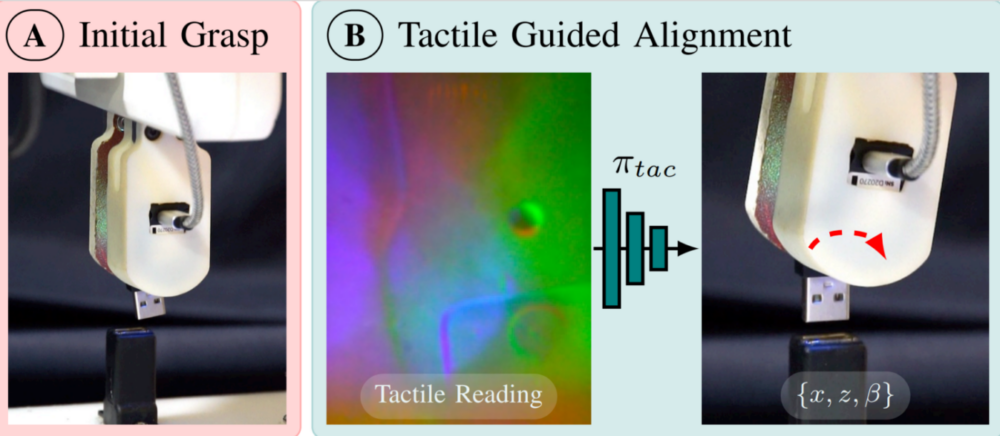

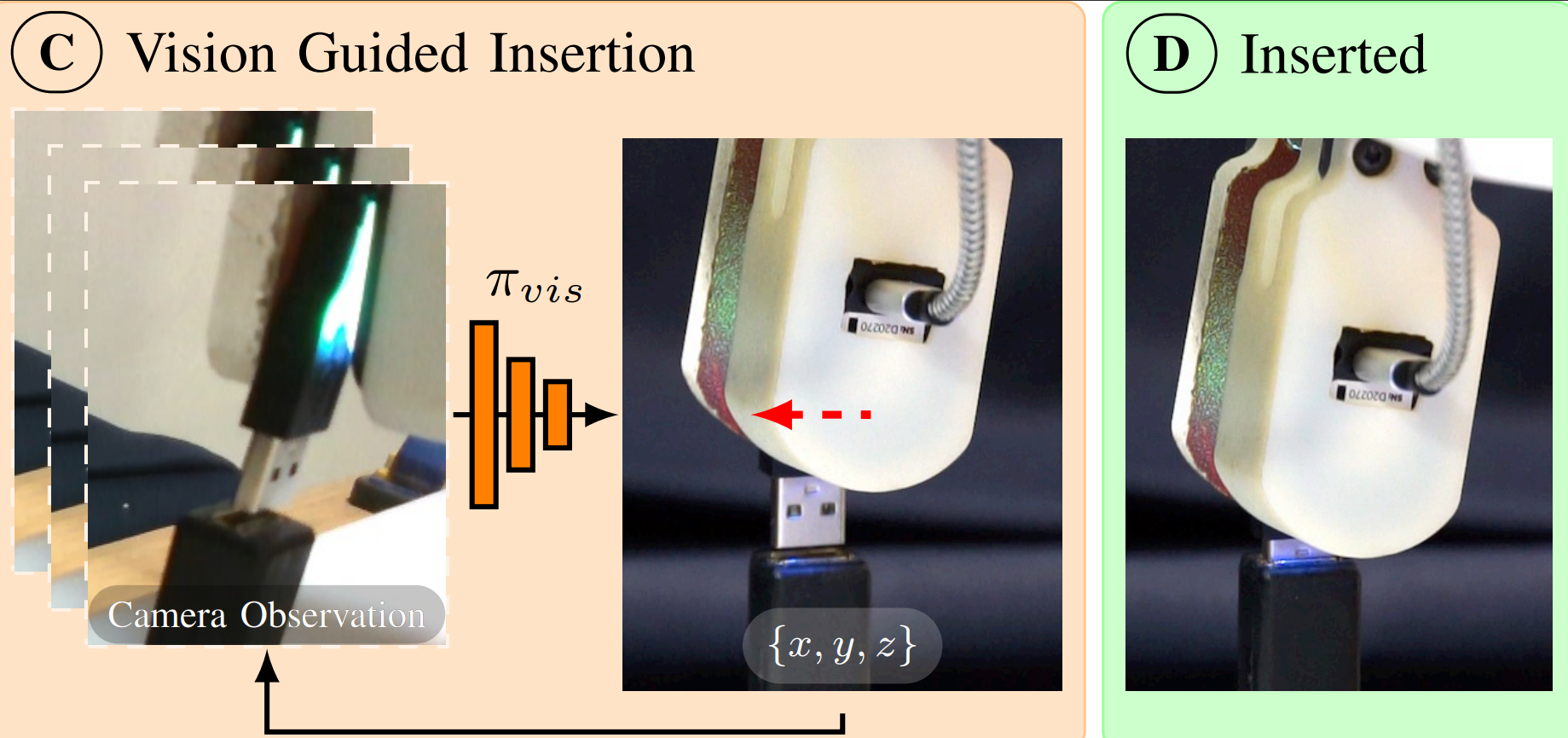

Fig. 1. Overview of the learned two-phase insertion policy. The red arrows indicate the robot actions given by the policies. (A) The robot grasps the part at an initial pose. (B) The tactile-guided policy estimates the grasp pose using the tactile image and aligns the z-axis of the part with the insertion axis. (C) A vision-guided policy is used to insert the part. (D) The part is inserted successfully into the receptacle.

In the Align phase, the robot uses tactile sensing to estimate how it’s holding the USB plug. It then re-orients the plug to align it with the insertion axis. In the next Insert phase, the robot learns to finish the insertion based on RGB images from the camera. The videos below show the safe data collection process for the two phases.
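To make the Align step concrete, here is a minimal sketch of the geometry involved. It is not the learned policy from the paper. It assumes, for illustration only, that the part has tilted in a single plane (the x-z plane of the gripper) and that some estimate of the part's axis is already available, standing in for the output of the tactile-guided policy. The function names `alignment_correction` and `rotate_about_y` are hypothetical.

```python
import math


def rotate_about_y(v, angle):
    """Rotate a 3-vector v about the y-axis by `angle` radians."""
    x, y, z = v
    c, s = math.cos(angle), math.sin(angle)
    return (c * x + s * z, y, -s * x + c * z)


def alignment_correction(part_axis, insertion_axis=(0.0, 0.0, 1.0)):
    """Return the rotation about y (radians) that brings part_axis onto
    insertion_axis, assuming both lie in the x-z plane.

    part_axis is a stand-in for the grasp pose a tactile policy would
    estimate; here it is simply passed in by the caller.
    """
    current = math.atan2(part_axis[0], part_axis[2])
    target = math.atan2(insertion_axis[0], insertion_axis[2])
    delta = target - current
    # Wrap to [-pi, pi] so the wrist takes the shorter rotation.
    return math.atan2(math.sin(delta), math.cos(delta))


if __name__ == "__main__":
    tilt = math.radians(10.0)  # assumed grasp tilt, for illustration
    part_axis = (math.sin(tilt), 0.0, math.cos(tilt))
    correction = alignment_correction(part_axis)
    print("correction (deg):", math.degrees(correction))
    print("aligned axis:", rotate_about_y(part_axis, correction))
```

In the real system the tilt estimate comes from a tactile image rather than being given, and the grasp pose is not necessarily restricted to one plane. The sketch only shows the corrective rotation that follows once an estimate exists.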

Separating the insertion task into these two phases lets us formulate the problem so that it is safer to learn than with a traditional reinforcement learning (RL) approach, where safe exploration is challenging. Incorporating tactile feedback into learning for insertion adds another layer of difficulty, because the grasped part frequently slips and rotates due to collisions with the environment and the smooth surface of the tactile sensor’s gel pad. This makes it difficult for RL methods to succeed without human intervention or an automatic reset mechanism that detects and corrects slippage. In this work, we develop a safe, self-supervised data collection pipeline with force-torque sensing, designed to minimize both contact force and human input during data collection. This video shows the robot working out how it’s holding the USB plug, re-orienting it, and finishing the insertion.
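The idea of force-guarded, self-labeled data collection can be illustrated with a small simulation. This is a sketch under stated assumptions, not the pipeline from the paper: the step size, the force limit, the `Sample` record, and the spring-like `simulated_force` model are all hypothetical, and a real system would read a wrist force-torque sensor instead. The robot advances in small steps, records the measured force at each step, and stops as soon as the force crosses a threshold, so every sample is labeled without a person watching.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Sample:
    depth_mm: float   # commanded depth along the insertion axis
    force_n: float    # measured contact force magnitude
    contact: bool     # self-generated label: did force reach the limit?


def guarded_descent(
    read_force: Callable[[float], float],
    step_mm: float = 0.5,
    max_depth_mm: float = 10.0,
    force_limit_n: float = 5.0,   # illustrative value, not from the paper
) -> Tuple[List[Sample], bool]:
    """Advance in small steps and stop when the force limit is reached.

    Returns the labeled samples and True if max depth was reached
    without exceeding the force limit.
    """
    samples: List[Sample] = []
    depth = 0.0
    while depth < max_depth_mm:
        depth = min(depth + step_mm, max_depth_mm)
        force = read_force(depth)
        contact = force >= force_limit_n
        samples.append(Sample(depth, force, contact))
        if contact:
            return samples, False  # stop early to limit contact force
    return samples, True


def simulated_force(depth_mm: float, obstacle_mm: float = 3.0,
                    stiffness_n_per_mm: float = 4.0) -> float:
    """Hypothetical sensor: zero force until an obstacle, then a linear spring."""
    return max(0.0, depth_mm - obstacle_mm) * stiffness_n_per_mm


if __name__ == "__main__":
    samples, reached = guarded_descent(simulated_force)
    print("reached full depth:", reached)
    print("stopped at (mm):", samples[-1].depth_mm)
```

Stopping at the first over-limit reading keeps the peak force bounded by roughly the limit plus one step of stiffness, which is why small steps matter in a guarded move like this.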

So, what comes next for us in this line of research? A natural next step is to generalize our approach to objects with different geometries. Depending on the size and surface texture of the object, tactile feedback can be difficult to learn from and to generalize. Moreover, insertion tasks can require different amounts of force depending on the tightness of the fit, so the effect of fit tightness on tactile feedback and on safe data collection based on force-torque sensing is also an exciting research area to explore.

[1] Letian Fu, Huang Huang, Lars Berscheid, Hui Li, Ken Goldberg, Sachin Chitta, Safe Self-Supervised Learning in Real of Visuo-Tactile Feedback Policies for Industrial Insertion, IEEE International Conference on Robotics and Automation (ICRA), 2023

Hui Li is a Senior Principal Research Scientist at Autodesk.
