The Problem of Generative Parroting: Navigating Toward Responsible AI (Part 3)

In the previous installments of this series, we explored the pervasive issue of generative parroting in AI models, focusing on the ethical and legal implications of generative models replicating their training data too closely. As generative AI continues to advance, it becomes crucial to develop methods that can identify and mitigate instances of generative parroting. This third part presents a simple approach that uses an overfitted Masked Autoencoder (MAE) to detect generative parroting, in support of responsible and ethical AI use.

Detecting Generative Parroting through Overfitted Masked Autoencoders

Introduction

Generative AI models like Stable Diffusion, DALL-E, and GPT have revolutionized digital content creation, enabling users to generate text, images, and other media with little effort. However, this rapid advancement brings significant ethical, legal, and technical challenges, particularly around copyright infringement and data privacy. Generative parroting, where models output content that closely mimics their training data, is at the heart of these issues.

Traditional methods to detect parroted content often involve exhaustive comparisons with vast datasets, which is computationally impractical for real-time scenarios. To address this, our research proposes a novel solution using an overfitted MAE. This approach effectively identifies parroted samples without needing extensive pairwise comparisons, ensuring efficient and scalable detection.

Methodology

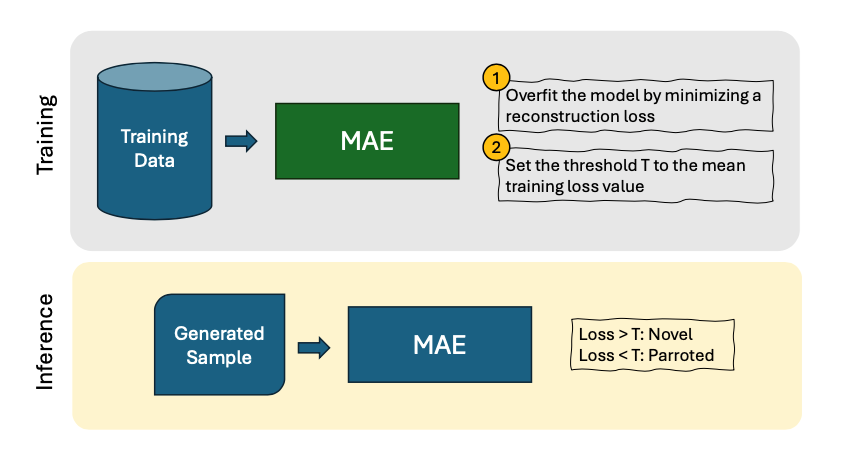

Our approach hinges on the MAE’s tendency to overfit its training data, enabling it to distinguish between samples closely aligned with the training data and those that are novel or significantly altered. The detection mechanism is based on the reconstruction loss: a lower loss indicates higher similarity to the training data, flagging potential parroted content.
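As a rough illustration of the scoring step, the sketch below computes a reconstruction loss over the masked patches only, which is how MAE losses are commonly defined. The post does not spell out the exact loss, so treat the mean-squared-error form as an assumption. The function names, the list-of-lists patch representation, and the `p_mask` argument (the fraction of patches hidden) are hypothetical and chosen for readability; in a real pipeline, `reconstructed` would come from the trained model.

```python
import random


def random_mask(num_patches, p_mask, rng):
    """Choose which patch indices to hide; p_mask is the fraction masked."""
    num_masked = max(1, round(p_mask * num_patches))
    return set(rng.sample(range(num_patches), num_masked))


def masked_reconstruction_loss(patches, reconstructed, masked):
    """Mean squared error computed over the masked patches only (assumed loss)."""
    total, count = 0.0, 0
    for i in masked:
        for original_value, predicted_value in zip(patches[i], reconstructed[i]):
            total += (original_value - predicted_value) ** 2
            count += 1
    return total / count


# Toy example: four patches of two values each; only patch 2 is reconstructed badly.
patches = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
reconstructed = [[0.0, 0.0], [1.0, 1.0], [2.0, 3.0], [3.0, 3.0]]
masked = random_mask(len(patches), p_mask=0.75, rng=random.Random(0))
loss = masked_reconstruction_loss(patches, reconstructed, masked)
```

A sample the model has memorized should score close to zero here, while a novel sample should score noticeably higher.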

Dataset

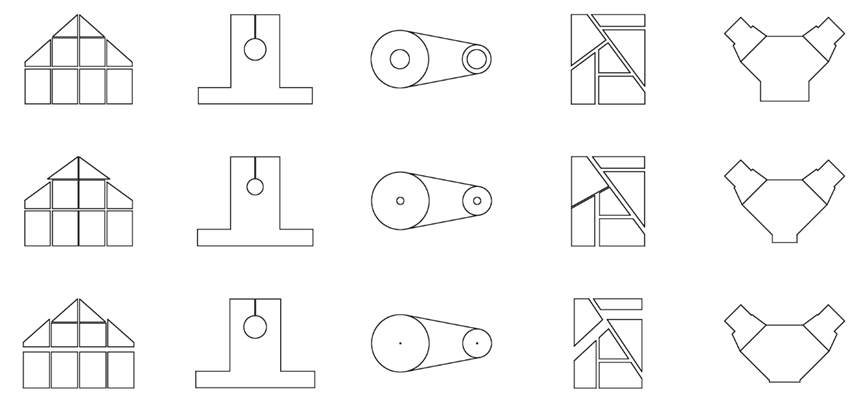

For our experiments, we utilized the SketchGraphs dataset, consisting of over 500,000 CAD sketches. We created two variations of each sketch to simulate minor and substantial modifications, representing potential generative deviations. The dataset was divided into four subsets:

- Training set: Original sketches.

- Modified set 1: Slightly modified sketches.

- Modified set 2: Substantially altered sketches.

- Novel set: Completely new, unseen samples.

Overfitting and Threshold Setting

We trained the MAE on the original sketches until the loss reached a minimal value, then set a detection threshold based on the mean reconstruction loss across those sketches. Samples with a reconstruction loss below this threshold are flagged as parroted. Because scoring a sample only requires running the model on that sample, rather than comparing it against the training set, detection can run in real time, which matters for designers and other users who need immediate feedback. Modified sets 1 and 2 and the Novel set are then used to evaluate how well the overfitted model detects modified samples and how often it misflags novel ones.
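The thresholding rule can be sketched in a few lines. This is a minimal illustration, not the exact procedure from the study: the post only says the threshold is "based on" the mean training loss, so the `scale` multiplier below is a hypothetical knob, and the loss values are placeholders rather than measured results.

```python
from statistics import mean


def fit_threshold(train_losses, scale=1.0):
    """Threshold derived from the mean reconstruction loss on training samples.

    `scale` is a hypothetical adjustment factor; scale=1.0 uses the plain mean.
    """
    return scale * mean(train_losses)


def flag_parroted(loss, threshold):
    """A sample is flagged when its reconstruction loss falls below the threshold."""
    return loss < threshold


# Placeholder losses for illustration only.
train_losses = [0.25, 0.75]
threshold = fit_threshold(train_losses)       # 0.5
is_flagged = flag_parroted(0.1, threshold)    # True: loss is below the threshold
is_novel = not flag_parroted(0.9, threshold)  # True: loss is above the threshold
```

With the plain mean as the threshold, some training samples will themselves sit above it, so any adjustment to the threshold trades detection of near-copies against false positives on novel samples.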

Experiments and Results

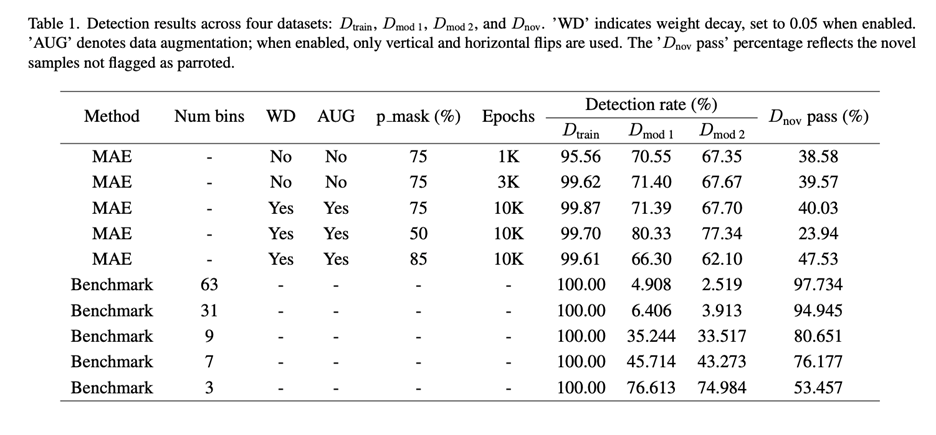

Our experiments employed a Vision Transformer-based MAE. We varied training durations and model parameters, observing that extended training improved detection rates for training and modified samples but also increased the likelihood of false positives in novel samples. The balance between sensitivity and specificity was critical, with a lower masking percentage (p_mask) significantly enhancing detection capabilities for modified samples.
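One way to track the trade-off described above is to compute, for each subset, the fraction of samples whose loss falls below the threshold. For the training and modified sets this fraction is a detection rate; for the novel set it is a false-positive rate. The helper names and the loss values below are hypothetical placeholders, not results from the study.

```python
def flag_rate(losses, threshold):
    """Fraction of samples whose reconstruction loss is below the threshold."""
    flagged = sum(1 for loss in losses if loss < threshold)
    return flagged / len(losses)


def evaluate(losses_by_subset, threshold):
    """Flag rate per subset: detection rate for seen data, false-positive rate for novel data."""
    return {name: flag_rate(losses, threshold)
            for name, losses in losses_by_subset.items()}


# Placeholder losses for illustration only.
losses_by_subset = {
    "training": [0.1, 0.2],
    "modified_1": [0.2, 0.4],
    "modified_2": [0.4, 0.8],
    "novel": [0.9, 0.3],
}
rates = evaluate(losses_by_subset, threshold=0.5)
```

Running the same evaluation for several training durations and masking percentages would show how each setting shifts detection rates against the false-positive rate.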

Benchmark Comparison

We compared our method against graph hashing approaches commonly used for evaluating the novelty of generated CAD models. Our MAE-based detector outperformed these benchmarks in detecting parroted content, highlighting its utility in real-world applications where both topology and geometry of sketches may vary.
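To make the contrast with hashing concrete, here is a small sketch of a hash-based novelty check. The post does not say which graph hash the benchmarks use, so the Weisfeiler-Lehman-style label refinement below is an assumption, and the graph encoding (nodes as sketch primitives, edges as connections between them) is hypothetical. The point it illustrates is that an exact-match hash only recognizes a sample whose graph is unchanged.

```python
import hashlib


def _digest(text):
    """Short, stable hex digest of a string."""
    return hashlib.sha256(text.encode()).hexdigest()[:16]


def wl_style_hash(node_labels, edges, iterations=3):
    """Hash a labeled undirected graph by iteratively mixing in neighbor labels.

    node_labels: dict mapping node id -> label string (e.g. "line", "arc").
    edges: list of (u, v) pairs. The result does not depend on node ids.
    """
    neighbors = {node: [] for node in node_labels}
    for u, v in edges:
        neighbors[u].append(v)
        neighbors[v].append(u)
    labels = dict(node_labels)
    for _ in range(iterations):
        labels = {
            node: _digest(labels[node] + "|" +
                          ",".join(sorted(labels[m] for m in neighbors[node])))
            for node in node_labels
        }
    return _digest(",".join(sorted(labels.values())))


# A training sketch, the same sketch with renumbered nodes, and a modified copy.
original = wl_style_hash({0: "line", 1: "line", 2: "arc"}, [(0, 1), (1, 2), (2, 0)])
renumbered = wl_style_hash({10: "arc", 11: "line", 12: "line"},
                           [(11, 12), (12, 10), (10, 11)])
modified = wl_style_hash({0: "line", 1: "line", 2: "arc", 3: "line"},
                         [(0, 1), (1, 2), (2, 0), (2, 3)])
seen_hashes = {original}
```

In this toy case `renumbered` matches the stored hash while `modified` does not, so a single added primitive is enough for a near-copy to pass as novel.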

Discussion

Our findings indicate that overfitted MAEs are effective in detecting generative parroting, with the model’s configuration playing a crucial role in balancing detection accuracy and false positives. The MAE’s superior performance, compared to graph-based hashing benchmarks, underscores its potential for scalable detection across various data modalities.

Conclusion

We have presented a simple yet effective approach for detecting generative parroting using an overfitted Masked Autoencoder. This method offers a practical and scalable way to address a significant challenge in generative AI, helping AI-generated content respect copyright and fostering trust in generative technologies. Future research will explore alternative architectures, different data modalities, and more sophisticated thresholding techniques to further enhance detection capabilities.

By advancing detection methods for generative parroting, we contribute to the ongoing discourse on ethical AI development, promoting responsible use of generative models without compromising on creativity.

Stay tuned for further insights and developments in responsible AI as we continue to navigate the complexities of generative AI and its implications.

Acknowledgments

We extend our gratitude to Ali Mahdavi-Amiri from Simon Fraser University and Karl D.D. Willis from Autodesk Research for their valuable insights and initial discussions on this work. Their contributions have been instrumental in shaping this research.

For more detailed information and to explore the complete study, refer to the published paper.
